Google Health has big ambitions for healthcare AI in 2024. The tech giant is exploring how generative AI can support clinicians' medical decision-making, interpret lab and imaging information, aid in early disease detection and give consumers a personal health coach based on their Fitbit data.

During Google Health's annual The Check Up event at the company's Pier 57 Manhattan office, executives at the tech giant shared updates and progress on several high-profile AI initiatives. The company is fine-tuning its Gemini model for the medical domain, building a personal health large language model (LLM) that can power personalized health and wellness features in the Fitbit mobile app, developing AI models to help with early disease detection and researching ways that generative AI can assist with medical reasoning and clinical conversations.

Last year at Google Health’s Check Up event, the company unveiled Med-PaLM 2, its LLM fine-tuned for healthcare. In December, Google introduced MedLM, a family of foundation models for healthcare built on Med-PaLM 2, and made it more broadly available through Google Cloud’s Vertex AI platform.

Several health systems are testing the company's LLMs to build solutions for a range of uses, including supporting clinicians' documentation and streamlining nurse hand-offs.

The industry is at an inflection point in AI, Karen DeSalvo, M.D., Google's chief health officer, told the audience at The Check Up event on Tuesday.

"As a doctor, it's thrilling to be part of this era at Google, where we can bring our most powerful technology to improve health. Our goal is to make AI helpful so people can lead healthier lives. We do this by building health into the products and services that people already use every day and by creating technology that enables our partners to succeed and our communities to thrive," she said.

"For the first time in my career, I can see clearly a near-term future where everyone everywhere lives a healthier life, not just some people in some places. I'm not alone in this optimism about the promising potential of AI to unlock better health," DeSalvo added. "We're in the early stages of understanding the opportunities these new AI technologies present. It seems clear that in the future AI won't replace doctors, but doctors who use AI will replace those who don't."

But DeSalvo stressed that AI is just a tool for clinicians to use as part of human-to-human interactions.

"Practicing medicine, I learned that health moves at the speed of trust. I know there are challenges ahead for all of us in getting this technology right, which will require intention and collaboration. Here at Google, we're working to earn trust every day by tackling important health problems in a way that is safe, private, secure and equitable," she said.

Developing a personal health LLM

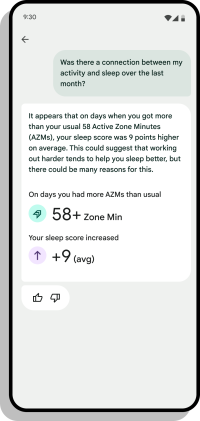

Fitbit and Google Research are working together to build a health LLM to give Fitbit and Pixel device users a personalized health coach based on their own data.

This model is being fine-tuned to deliver personalized coaching capabilities, such as actionable messages and guidance, that can be individualized based on personal health and fitness goals. Last fall, the company launched Fitbit Labs, which uses AI to provide deeper health insights.

"We're partnering with Google Research, health and wellness expert doctors and personalized and certified coaches to create personal health large language models that can reason about your health and fitness data and provide tailored recommendations, similar to how a personal coach would," Florence Thng, director of product management at Fitbit, said during the event. "This personal health LLM will be a fine-tuned version of our Gemini model. This model fine-tuned using high-quality research case studies based on the de-identified diverse health signals from Fitbit will help users receive more tailored insights based on patterns in sleep schedule, exercise intensity, changes in heart rate variability, resting heart rate and many more metrics."

As one example, the model may be able to analyze variations in users' sleep patterns and sleep quality, and then suggest how users might change the intensity of their workouts based on those insights.

The AI-based coaching capabilities can mimic real-world coaching scenarios, Thng said.

"Imagine a future where you have access to an on-demand personal coach that can provide you with daily guidance. For example, it can analyze variation in your sleep patterns and sleep quality and make recommendations on how you might change the intensity or the time of your workout based on these insights," she said. "We're also keeping responsible AI practices at the center of this new model by thoughtfully designing it and training it on a diverse and curated sets of health and wellness data that's covering a wide range of domains."

The new personal health LLM will power future AI features across the Fitbit portfolio to bring personalized health experiences to users.

Building out AI's assistive capabilities in healthcare

Earlier this year, Google rolled out AMIE (Articulate Medical Intelligence Explorer), a research AI system built on an LLM and optimized for diagnostic reasoning and clinical conversations.

"AMIE is a research system that is based on an LLM. We've trained it to explore how AI can support clinical conversations by asking contextually relevant questions in search of a diagnosis," said Greg Corrado, distinguished scientist, head of health AI at Google Research, during Tuesday's event.

"Empathy is a core part of medicine. So we've designed this model to communicate with respect, explaining things clearly and supporting the individual in their decision-making process. This better reflects real-world clinical consultations where caregivers always ask for the information that will give them more insight in the patient's care in the most humane way possible."

Researchers evaluated AMIE's conversational capabilities by having it interact with patient actors in mock clinical examinations.

"We found that the system was capable of dialogue and diagnostic reasoning and conversing with patient actors in a helpful and empathetic way. It's worth noting that these studies were all conducted in a simulated environment, and they aren't necessarily representative of everyday clinical practice," Corrado said. "While we think these early results with AMIE are very promising, we need to understand whether a system like AMIE can be of real use in a real clinical setting."

In a randomized comparison with real primary care clinicians performing the same simulated text consultations, AMIE was rated on par with or higher than the clinicians on traits such as diagnostic accuracy, empathy and helpfulness of explanations.

Researchers will now start testing the system with a healthcare organization to gauge how helpful it is for clinicians and patients, Corrado said.

"This research is about exploring the art of the possible in healthcare and if it is possible, whether and how to build it safely, equitably, and in an assistive way," he added.

This AI research represents "the next frontier of healthcare innovation," Corrado said.

"We truly are in a new era of AI innovation at Google. The Gemini family of models is unlocking new capabilities to power this era. We've fine-tuned Gemini models for the medical domain, and have been evaluating their performance across a wide range of tasks. We've seen gains in advanced reasoning, in long context windows and in multi-modality."

Fine-tuning Gemini for the medical industry

Important information about patients' histories is often buried deep in a medical record, making it difficult to find relevant details quickly.

Google's latest research demonstrated that a version of the Gemini model fine-tuned for healthcare achieved 91.1% accuracy on a benchmark of U.S. Medical Licensing Exam–style questions and showed improved performance on a video dataset called MedVidQA.

Going beyond medical exam knowledge, Google teams are researching how this Gemini model can enable new capabilities in advanced reasoning, understanding large volumes of context and processing multiple modalities.

At HIMSS24, Google announced the launch of MedLM for Chest X-ray. This gen AI model can help with classification of chest X-rays for operational, screening and diagnostics use cases.

"In medicine, context matters, and that's why we've expanded our evaluations to investigate long context window tasks, asking models to sift through large quantities of information like text, images, audio or video to extract relevant information," Corrado said.

"Gemini models were built from the ground up to be multimodal. And after all, medicine is also inherently multimodal," he added. "To make the best care decisions, healthcare professionals regularly interpret signals across a plethora of sources, including medical images, clinical notes, electronic health records and lab tests. To be genuinely helpful, AI should be able to follow a care team's reasoning as it crosses the boundary between these different information types."

A radiologist interpreting a chest X-ray must create a report about the image, describe the findings and answer questions from the referring physician, he noted. The physician, in turn, will need to take actions based on that report.

"It would be a big help if AI could assist the radiologist by spotting errors or omissions or even helping to draft the report itself. As a proof of principle, we built a model that can generate radiology reports based on a set of open-access, de-identified chest X-rays," he said.

Researchers are also seeing promising results from the model on complex tasks such as report generation for 2D images like X-rays and 3D images like brain CT scans, which executives described as a step-change in Google's medical AI capabilities.

"This emphasizes that it's time to evaluate AI's ability to assist radiologists in real-world report generation workflows," Corrado said.